This tutorial describes how to upload large files to an Azure file share using AzCopy and PowerShell. The first time I tried to upload large files to Azure, I was a bit optimistic and tried to copy and paste my files directly through Remote Desktop. My files were database files with around 200 GB of data, and my internet connection had an upload speed of about 5 Mbit/s. It did not work out very well: the file transfers timed out or were aborted. Then I tried plain PowerShell and Set-AzureStorageBlobContent to upload my files, but the uploads still timed out or got interrupted.
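A quick back-of-the-envelope estimate shows why a single long-running upload over such a link is fragile. The sketch below uses a hypothetical helper (`Get-UploadSeconds` is not part of any Azure module). It assumes decimal units (1 GB = 10^9 bytes) and ignores protocol overhead and throttling.

```powershell
# Hypothetical helper: rough upload-time estimate.
# Assumes decimal units (1 GB = 10^9 bytes) and no protocol overhead or throttling.
function Get-UploadSeconds {
    param(
        [double]$SizeGB,      # amount of data to upload, in GB
        [double]$UploadMbit   # upload bandwidth, in Mbit/s
    )
    $bits = $SizeGB * 1e9 * 8            # bytes -> bits
    return $bits / ($UploadMbit * 1e6)   # bits / (bits per second) = seconds
}

$seconds = Get-UploadSeconds -SizeGB 200 -UploadMbit 5
"{0:N0} seconds, about {1:N1} hours" -f $seconds, ($seconds / 3600)
```

For 200 GB at 5 Mbit/s this gives 320,000 seconds, roughly 89 hours or almost four days of uninterrupted uploading, which leaves plenty of time for a connection to drop.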

To solve this issue I used an Azure file share, PowerShell and AzCopy. AzCopy is a command-line utility that lets you copy data to and from Azure Blob, File, and Table storage. Recent versions also support resuming failed and interrupted uploads.

This tutorial assumes you already have a working Azure subscription and that you have configured Azure for use with PowerShell:

https://azure.microsoft.com/en-us/documentation/articles/powershell-install-configure/

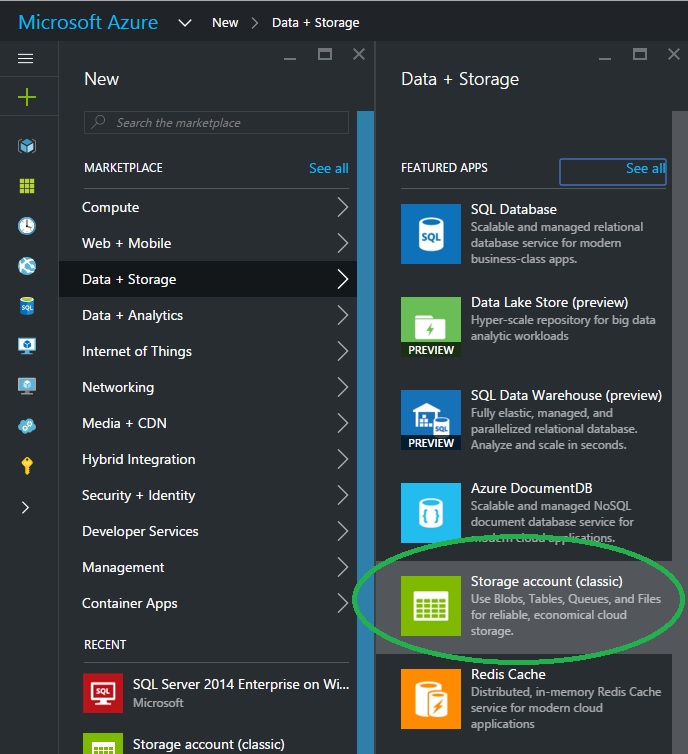

Before we can upload any files to Azure we need somewhere to store them, so go ahead and create an Azure file share in a storage account.

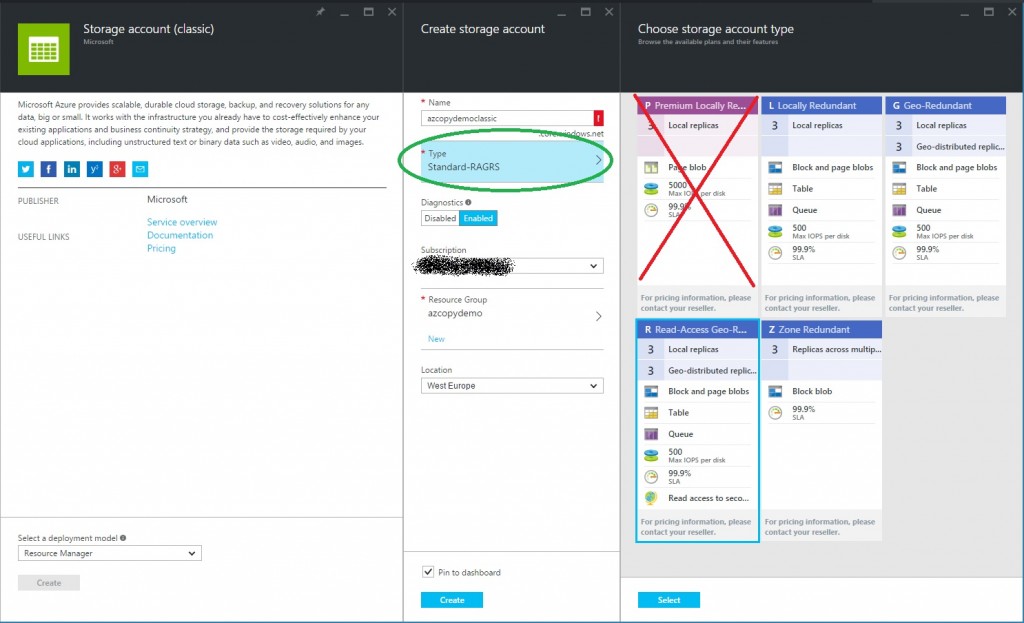

Create Azure file share

Do not choose premium storage: at the time of writing, premium storage did not support file shares. Choose a standard storage type instead (Standard-RAGRS, for example):

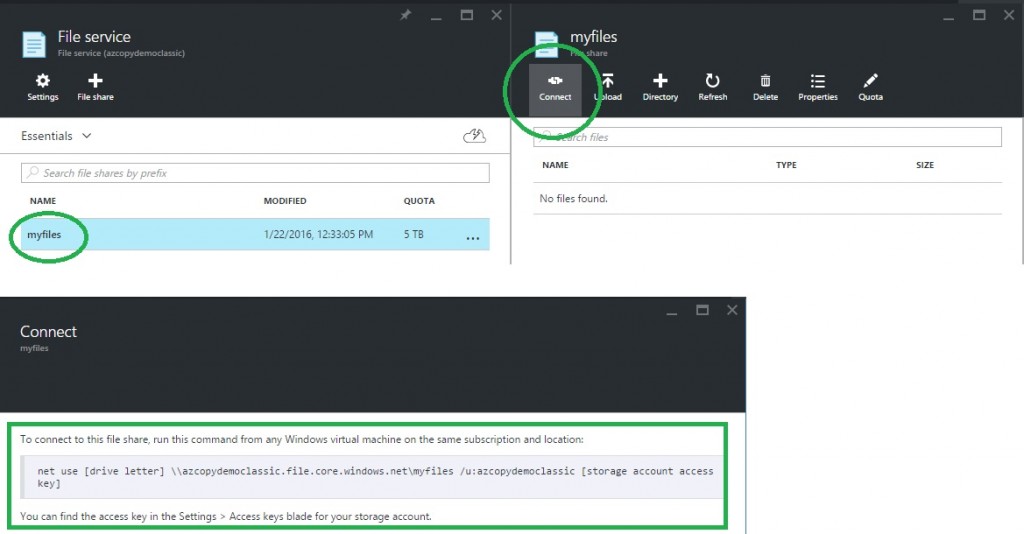

You can also use the file share as a network drive on your virtual machine(s). Once the file share is created, select it and click Connect to get the command that mounts it as a network drive:

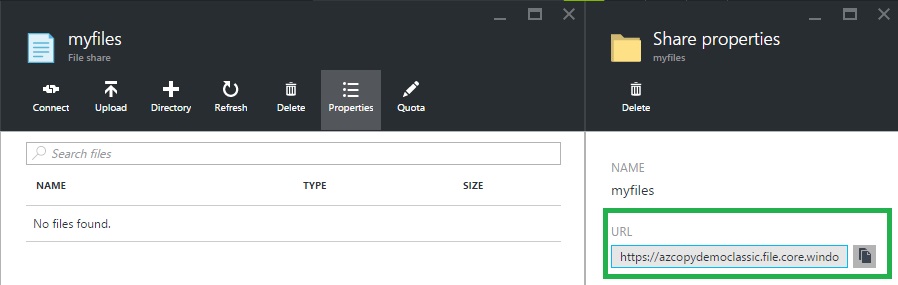
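The command shown under Connect typically mounts the share through its UNC path, `\\<account>.file.core.windows.net\<share>`. As an illustration of what such a command looks like, the hypothetical helper below assembles a `net use` command from its parts. The `AZURE\<account>` user-name format is an assumption based on the usual Azure Files instructions, so prefer the exact command the portal gives you.

```powershell
# Hypothetical helper: builds a "net use" command for mounting an Azure file share.
# Assumes the public Azure cloud endpoint (file.core.windows.net) and the
# AZURE\<account> user-name format; the portal's Connect command is authoritative.
function Get-NetUseCommand {
    param(
        [string]$DriveLetter,   # e.g. "Z" or "Z:"
        [string]$AccountName,   # storage account name
        [string]$ShareName,     # file share name
        [string]$AccountKey     # storage account access key
    )
    $letter = $DriveLetter.TrimEnd(':')
    $unc = "\\$AccountName.file.core.windows.net\$ShareName"
    return "net use ${letter}: $unc /u:AZURE\$AccountName $AccountKey"
}

Get-NetUseCommand -DriveLetter Z -AccountName "azcopydemoclassic" -ShareName "myfiles" -AccountKey "ABC123-YOUR-KEY-GOES-HERE"
```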

If you click properties you will see the URL of the file share:

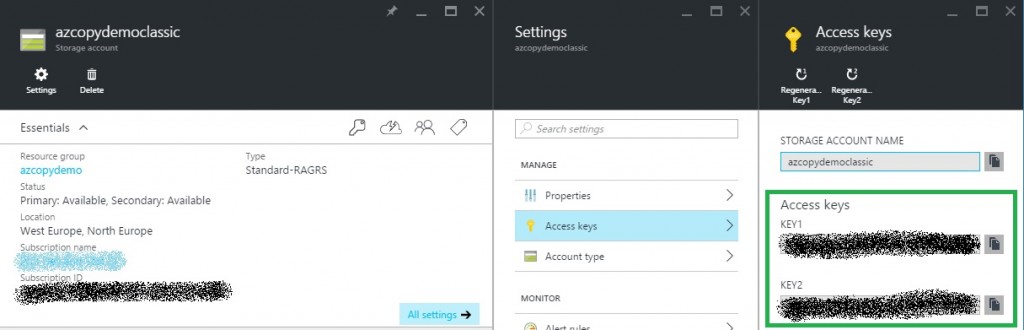
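For storage accounts in the public Azure cloud, the file share URL follows the pattern `https://<account>.file.core.windows.net/<share>/`. A minimal sketch that builds it, using a hypothetical helper name `Get-FileShareUrl`:

```powershell
# Hypothetical helper: builds the HTTPS URL of a file share in the public Azure cloud.
# The trailing "/" marks the destination as a folder (the share root).
function Get-FileShareUrl {
    param(
        [string]$AccountName,   # storage account name
        [string]$ShareName      # file share name
    )
    return "https://$AccountName.file.core.windows.net/$ShareName/"
}

Get-FileShareUrl -AccountName "azcopydemoclassic" -ShareName "myfiles"
```

This produces the same destination URL that the script below uses for the demo account and share.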

To connect to the file share you will also need a storage account access key, which you can find on the main page of the storage account under Access keys.

Note down the access key and the URL; you will need both when running the PowerShell script and AzCopy later on.
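One way to avoid pasting the key into the script itself is to read it from an environment variable. This is a minimal sketch; the variable name `AZCOPY_DEMO_KEY` and the helper `Get-StorageAccountKey` are hypothetical.

```powershell
# Hypothetical helper: reads the storage account key from an environment variable
# so it does not have to be hard-coded in the script.
function Get-StorageAccountKey {
    param([string]$VariableName = "AZCOPY_DEMO_KEY")   # hypothetical variable name
    $key = [Environment]::GetEnvironmentVariable($VariableName)
    if ([string]::IsNullOrWhiteSpace($key)) {
        throw "Environment variable $VariableName is not set."
    }
    return $key
}
```

The script could then use `$accountKey = Get-StorageAccountKey` instead of the hard-coded value.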

Install AzCopy

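Download and install AzCopy. The script below uses the /Source:, /Dest: and /Pattern: syntax of the legacy Windows AzCopy (version 8.x and earlier); AzCopy v10 uses a different command syntax (azcopy copy) and will not run this script unchanged.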

Run the AzCopy PowerShell script

By default, AzCopy installs to C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy. While transferring files, AzCopy keeps a journal in %LocalAppData%\Microsoft\Azure\AzCopy, which it uses to resume failed or interrupted transfers.
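A leftover journal file indicates that an earlier transfer did not finish. The sketch below checks for one; `Test-AzCopyJournal` is a hypothetical helper, and the journal file name `AzCopyEntries.jnl` is assumed to be the one the legacy AzCopy writes, so verify it against your AzCopy version.

```powershell
# Hypothetical helper: returns $true when the journal folder contains an AzCopy
# journal file, i.e. an earlier transfer did not finish and can be resumed.
# Assumes the legacy journal file name AzCopyEntries.jnl.
function Test-AzCopyJournal {
    param(
        [string]$JournalFolder = "$env:LocalAppData\Microsoft\Azure\AzCopy"
    )
    $journalFile = Join-Path $JournalFolder "AzCopyEntries.jnl"
    return (Test-Path -LiteralPath $journalFile)
}
```

For example, `if (Test-AzCopyJournal) { ... }` could decide whether to resume an old transfer before starting new uploads.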

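Modify the script below to fit your needs, replacing the access key, the destination URL (your file share's URL) and the list of files to transfer: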

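```powershell
# Default installation folder of the legacy Windows version of AzCopy
$azCopyPath = "C:\Program Files (x86)\Microsoft SDKs\Azure\AzCopy"
Set-Location $azCopyPath

$accountName = "azcopydemoclassic"          # storage account name (not used by the AzCopy call below)
$accountKey  = "ABC123-YOUR-KEY-GOES-HERE"  # storage account access key
$dest        = "https://azcopydemoclassic.file.core.windows.net/myfiles/"

# Journal folder AzCopy uses to resume interrupted transfers (the default location)
$azJournal   = "$env:LocalAppData\Microsoft\Azure\AzCopy"
$maxAttempts = 10   # give up on a file after this many attempts

$filesToTransfer = @(
    "C:\Projects\Azure\test1.zip",
    "C:\Projects\Azure\test2.zip"
)

foreach ($file in $filesToTransfer) {
    $fileItem = Get-Item $file
    # No trailing backslash: in a quoted path with spaces it would escape the closing quote
    $path     = $fileItem.DirectoryName
    $fileName = $fileItem.Name

    Write-Host "Path: " $path -ForegroundColor Yellow
    Write-Host "Filename: " $fileName -ForegroundColor Yellow

    $i = 1
    do {
        # Running the same command line again with the same journal folder
        # makes AzCopy resume the incomplete transfer instead of starting over
        $transferResult = .\AzCopy.exe /Source:$path /Pattern:$fileName /Dest:$dest /DestKey:$accountKey /Z:$azJournal /Y
        $transferResult

        # Read the failure count from the "Transfer failed:" summary line
        # rather than from a fixed line index in the output
        $summary = $transferResult | Select-String 'Transfer failed:\s*(\d+)' | Select-Object -First 1
        $f = if ($summary) { [int]$summary.Matches[0].Groups[1].Value } else { 1 }

        Write-Host "Attempt $i, failed transfers: $f" -ForegroundColor Yellow
        $i++
    } while ($f -gt 0 -and $i -le $maxAttempts)
}
```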
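The retry logic in the script can also be factored into reusable functions with an explicit upper bound on attempts. This is a sketch under assumptions: the function names are hypothetical, and the `Transfer failed: <n>` line is assumed to be how the legacy AzCopy reports failures in its transfer summary. The AzCopy call is passed in as a script block, which also makes the loop easy to try out without AzCopy.

```powershell
# Returns the number from the first "Transfer failed: <n>" line in AzCopy's
# output, or -1 if no such summary line is present (e.g. AzCopy exited early).
function Get-AzCopyFailedCount {
    param([string[]]$Lines)
    $match = $Lines | Select-String -Pattern 'Transfer failed:\s*(\d+)' | Select-Object -First 1
    if ($null -eq $match) { return -1 }
    return [int]$match.Matches[0].Groups[1].Value
}

# Runs $Invoke (a script block that calls AzCopy and returns its output) until
# the summary reports zero failed transfers or $MaxAttempts is reached.
# Returns the number of attempts that were needed.
function Invoke-AzCopyWithRetry {
    param(
        [Parameter(Mandatory)][scriptblock]$Invoke,
        [int]$MaxAttempts = 10
    )
    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        $lines = @(& $Invoke)
        $failed = Get-AzCopyFailedCount -Lines $lines
        if ($failed -eq 0) { return $attempt }   # everything transferred
        Write-Host "Attempt $attempt did not complete (failed count: $failed), retrying" -ForegroundColor Yellow
    }
    throw "Transfer still incomplete after $MaxAttempts attempts."
}
```

Inside the script's `foreach` loop it could be called as `Invoke-AzCopyWithRetry -Invoke { .\AzCopy.exe /Source:$path /Pattern:$fileName /Dest:$dest /DestKey:$accountKey /Y }`, which reruns the same command line so AzCopy can pick up its journal from the default location.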

Just wanted to let you know: I modified your script by declaring $azJournal at the beginning, and I added an if statement that checks whether an existing transfer had been abandoned. This way I can resume it immediately.

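```powershell
# Declared at the beginning of the script
$azJournal = "$env:LocalAppData\Microsoft\Azure\AzCopy"

# Resume straight away if an earlier transfer left its journal file behind
If (Test-Path "$azJournal\AzCopyEntries.jnl") {
    $f = 1   # assume pending work so the loop runs at least once
    $i = 1
    while ($f -gt 0) {
        $i++
        $transferResult = .\AzCopy.exe /Z:$azJournal /Y
        $transferResult
        [int]$f = $transferResult[5].Split(":")[1].Trim()
        $i
    }
}
```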

Very cool script! How can I make it upload the contents of a folder, including its files and subfolders, instead of just declaring one or two files?

Hello, I'm trying to run the script to upload a 4 GB video, but it stays at lines 19-20 and never finishes. What should I do?